🤖🤷‍♂️ Eazy-Kine Fo Fool A.I.-Detection Tools o’ Wot?

⬇️ Pidgin | ⬇️ ⬇️ English

Da pope neva dress up in Balenciaga. An’ da movie makers neva trick us ’bout da moon landing. But in da recent times, super real kine images of dese kine stuff, all made by da artificial intelligence, been flying ’round da internet, making plenny peeps confuse ’bout wot’s real an’ wot’s kapakahi. 🌙💻🌐

Fo’ help us sort through all dis kine pilikia, get choke companies popping up offering services fo’ tell us wot’s pono an’ wot’s shibai. 💼👀

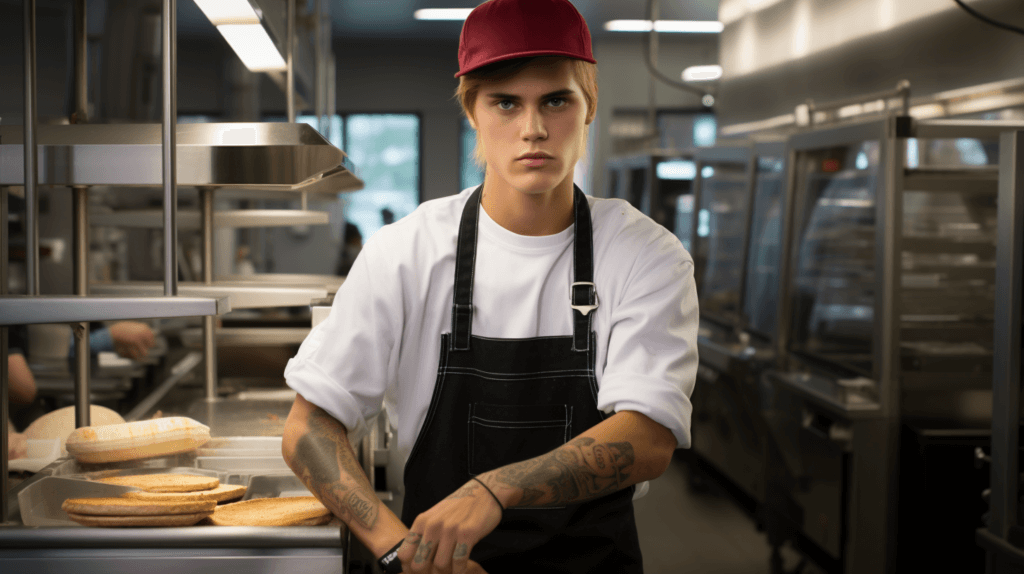


Dere tools go check out da content using all kine smart algorithms, catching da small kine signs to tell da difference ’tween da images made by computers an’ da ones dat humans wen take or make. But had some big kahunas in da tech world an’ experts on top da fake news say dat da A.I. always going stay one step ahead of da tools. 🖥️🔎🏃‍♂️

Fo’ check how good da A.I.-detection technology stay, The New York Times wen try out five new services wit’ ova 100 synthetic images an’ real photos. Da results show dat da services getting betta fast, but sometimes dey no can. 📸📈💥

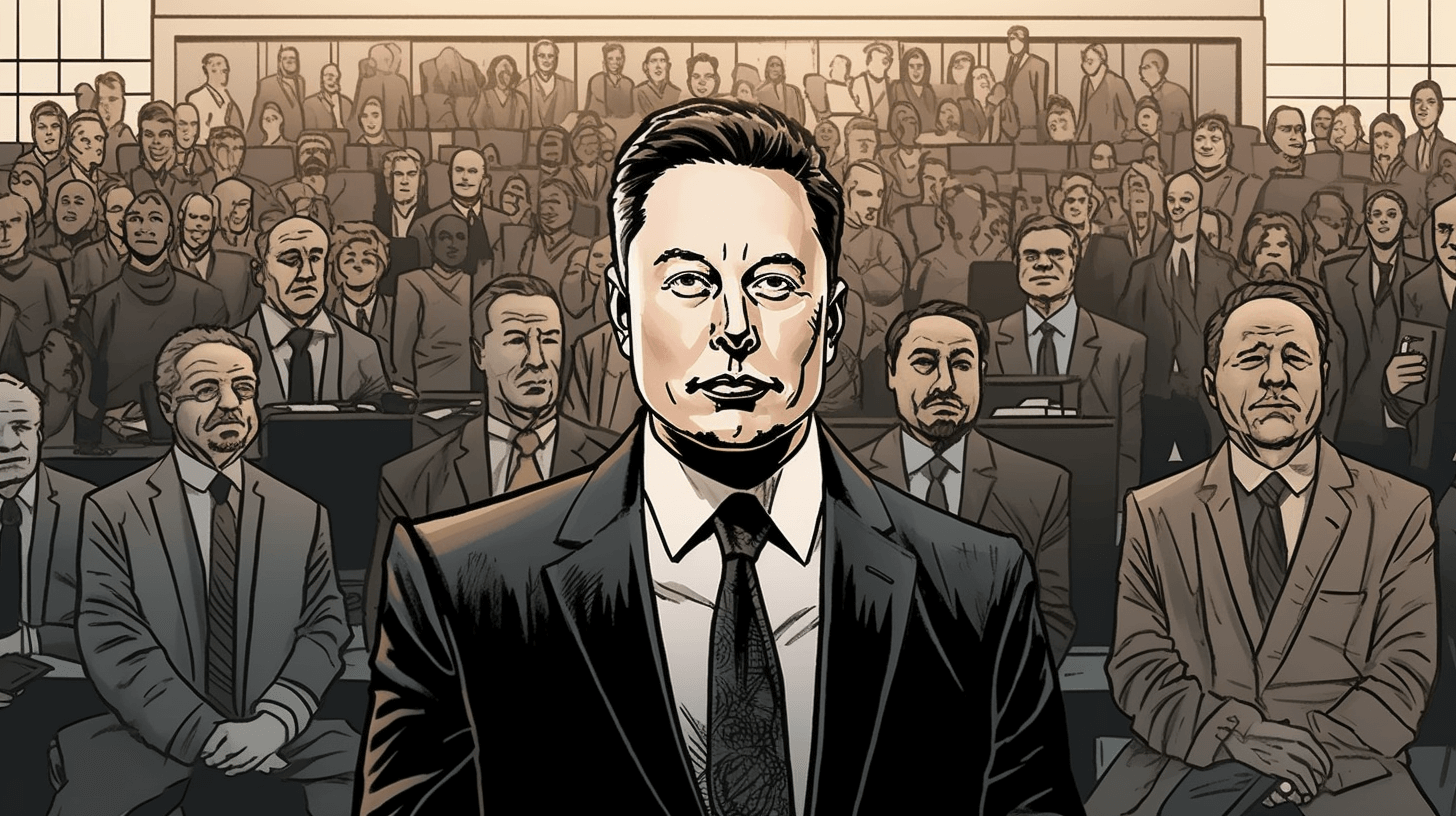

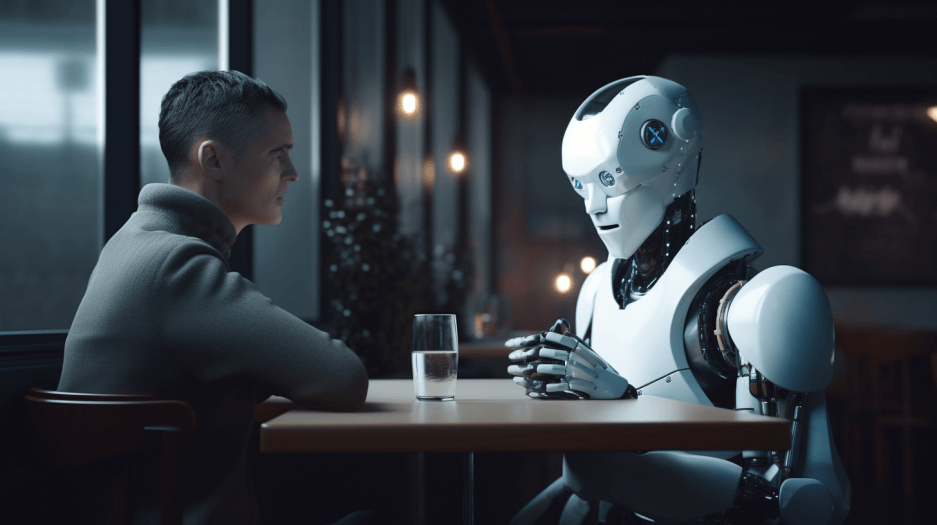

Had dis image dat look like Elon Musk, da billionaire entrepreneur, hugging one robot dat look like one real human. Da image was made by using Midjourney, one A.I. image generator, by Guerrero Art, one artist dat work wit’ A.I. technology. 🤖💰🎨

Even though da image look kind unreal, it wen trick plenny A.I.-image detectors. 🎭🕵️‍♀️

Dese detectors, including da ones dat charge money fo’ use, like Sensity, an’ da free ones, like Umm-maybe’s A.I. Art Detector, dey made fo’ spot da hard fo’ see markers inside da A.I.-generated images. Dey look fo’ da unusual patterns in how da pixels stay arranged, including in deir sharpness and contrast. Dose kine signs usually come up wen A.I. programs make images. 👁️🔍

But da detectors no mind all da context clues, so dey no acknowledge da fact dat one lifelike robot standing next to Mr. Musk in one photo no make sense. Dis one big problem wit’ relying on dis kine technology fo’ detect fake stuff. 🤷‍♂️💔

Had choke companies, including Sensity, Hive an’ Inholo, da company behind Illuminarty, no beef da results an’ said deir systems always getting betta fo’ keep up wit’ da latest advancements in A.I.-image generation. Hive added dat its misclassifications might happen wen it analyzes lower-quality images. Umm-maybe an’ Optic, da company behind A.I. or Not, no ansa wen people ask fo’ comment. 🏭📞🙅‍♂️

Fo’ do da tests, The Times wen collect A.I. images from artists an’ researchers familiar wit’ all kine generative tools like Midjourney, Stable Diffusion an’ DALL-E, which can make real-looking portraits of people an’ animals an’ super real kine depictions of nature, real estate, grindz an’ mo’. Da real images used came from The Times’s photo archive. 🧪🔬🎨

Da detection technology been praised as one way to lessen da damage from A.I. images. But da A.I. experts like Chenhao Tan, one assistant professor of computer science at da University of Chicago an’ da director of its Chicago Human+AI research lab, not so sure ’bout dat. 🧑‍🎓📚

“In general I no tink dey’re great, and I’m not hopeful dat dey going be,” he said. “In da short term, maybe dey can work wit’ some accuracy, but in da long run, anything special a human does wit’ images, A.I. going be able to do da same kine thing too, an’ it going be super hard fo’ tell da difference.” 🙄💡

Most of da worry been ’bout da lifelike portraits. Gov. Ron DeSantis of Florida, who also one Republican candidate fo’ president, wen get heat afta his campaign wen use A.I.-generated images in one post. Synthetic artwork dat focus on top scenery also wen cause confusion in political races. 🏞️📢

Plenny of da companies behind da A.I. detectors admit dat deir tools no perfect an’ warn ’bout one technological arms race: Da detectors gotta always try catch up to da A.I. systems dat seem like dey getting betta every minute. 🏎️⏰

“Every time somebody build one betta generator, people build betta discriminators, an’ den people use da betta discriminator fo’ build one betta generator,” said Cynthia Rudin, a computer science and engineering professor at Duke University, where she also da principal investigator at the Interpretable Machine Learning Lab. “Da generators stay designed fo’ trick a detector.” 🔄🧱

Sometimes, da detectors no work even when an image obviously fake. Dan Lytle, one artist who work wit’ A.I. and get one TikTok account called The_AI_Experiment, wen ask Midjourney to make one old-time picture of one giant Neanderthal standing among regular men. It wen make dis old kine portrait of one big, Yeti-like beast next to one quaint couple. 👨‍🎨🦍💔

Da wrong result from each service tested show one problem wit’ da current A.I. detectors: Dey tend to struggle wit’ images dat been changed from deir original output or low quality, according to Kevin Guo, one founder an’ da chief executive of Hive, one image-detection tool. 🧩🔧

Wen A.I. generators like Midjourney make photorealistic artwork, dey pack da image wit’ millions of pixels, each one get clues ’bout where it come from. “But if you twist it, if you resize it, lower da resolution, all dat kine stuff, you changing doze pixels an’ dat extra digital signal is going away,” Mr. Guo said. 🎆💡

Wen Hive, fo’ example, ran a higher-resolution version of da Yeti artwork, it wen figure out right dat da image was A.I.-generated. 🖼️🔍

Dese kine shortfalls can undermine da potential fo’ A.I. detectors to become one weapon against fake content. As images go viral online, dey often get copied, resaved, made smaller or cropped, hiding da important signals dat A.I. detectors rely on. One new tool from Adobe Photoshop, known as generative fill, uses A.I. fo’ make one photo bigger than da original size. (Wen test ’em wit’ one picture dat was made bigger using generative fill, da technology confuse most detection services.) 📷🌐❗

Da oddah portrait below show President Biden, an’ da resolution mo’ bettah. It was taken in Gettysburg, Pa., by Damon Winter, da photographer fo’ The Times. 📸👔

Plenny of da detectors wen guess right dat da portrait was legit, but no all of ’em. 🧐🚫

It’s one significant risk fo’ A.I. detectors fo’ wrongly label one real image as A.I.-generated. Sensity wen can tell most A.I. images was fake. But dat same tool wen label plenny real photographs as A.I.-generated wen dey wasn’t. 📛✖️📸

Dese risks can affect da artists too, cuz dey might get accused wrongly of using A.I. tools in deir artwork. 🎨👩‍🎨❌

Dis Jackson Pollock painting, called “Convergence,” get da artist’s usual colorful paint splatters. Most – but not all – da A.I. detectors wen decide dis one was real an’ not one A.I.-generated copy. Illuminarty’s creators wen say dey like make one detector dat can identify fake artwork, like paintings an’ drawings. 🎨🔍🧑‍🎨

In da tests, Illuminarty wen assess most real photos as legit, but only about half of da A.I. images as artificial. Da tool, creators say, stay designed fo’ be extra careful an’ no falsely accuse artists of using A.I. 🖌️✅🚫

Illuminarty’s tool, along wit’ most oddah detectors, wen figure out one similar image in Pollock’s style dat The New York Times made using Midjourney. 💥📝🖼️

Da A.I.-detection companies say deir services stay made fo’ help bring transparency an’ accountability, an’ fo’ help spot fake stuff, fraud, revenge porn, dishonest artwork, an’ oddah misuses of da technology. But da experts say dat financial markets an’ voters could become vulnerable to A.I. trickery. 💸🗳️🤖

Dis image, in da style of one black-an’-white portrait, look pretty convincing. It was made wit’ Midjourney by Marc Fibbens, one artist from New Zealand dat work wit’ A.I. Most of da A.I. detectors still wen guess right dat it was fake. 🎭🤷‍♂️

But da detectors wen struggle when jus’ one li’l bit of grain wen come in. Suddenly, detectors like Hive wen believe dat da fake images was real photos. Da small texture, dat was hard fo’ see, wen mess wit’ da ability fo’ analyze da pixels an’ find signs of A.I.-generated stuff. Some companies now trying fo’ find da use of A.I. in images by looking at da perspective or da size of da subjects’ limbs, on top scrutinizing da pixels. 🤔📊🔍

Da artificial intelligence not only can make realistic images – da technology stay already creating text, audio, an’ videos dat wen fool professors, trick people fo’ money, an’ been used in try fo’ change da outcome of wars. 📚💰📺🤯

A.I.-detection tools no should be da only defense, researchers say. Da peeps who create images should put watermarks in deir work, said S. Shyam Sundar, da director of da Center fo’ Socially Responsible Artificial Intelligence at Pennsylvania State University. Websites could also incorporate detection tools into deir systems, so dey can automatically find A.I. images an’ show ’em cautiously to users wit’ warnings an’ restrictions on how dey can be shared. 🚫💦🌐

Images get one big power, Mr. Sundar said, cuz dey “get dat power fo’ make people react strongly. Peeps mo’ likely fo’ believe wot dey see wit’ deir own eyes.” 🤩👁️👍

NOW IN ENGLISH

🤖🤷‍♂️ How Easy Is It to Fool A.I.-Detection Tools, or What?

The pope did not wear Balenciaga. And filmmakers did not fake the moon landing. But recently, highly realistic images of these scenes, all created by artificial intelligence, have been circulating online, leaving many people confused about what’s real and what’s fake. 🌙💻🌐

To help sort through this confusion, a growing number of companies now offer services that aim to tell what is real from what is fake. 💼👀

These tools analyze content with sophisticated algorithms, picking up subtle signals that distinguish images made by computers from those taken or created by people. However, some tech leaders and misinformation experts worry that AI will always stay a step ahead of these detection tools. 🖥️🔎🏃‍♂️

To evaluate how well current AI-detection technology works, The New York Times tested five new services using more than 100 synthetic images and real photos. The results show that the services are improving rapidly but sometimes fall short. 📸📈💥

One image, for instance, depicted Elon Musk, the billionaire entrepreneur, embracing a lifelike robot. This image was generated using Midjourney, an AI image generator, by Guerrero Art, an artist working with AI technology. 🤖💰🎨

Despite the implausible nature of the image, it managed to deceive several AI image detectors. 🎭🕵️‍♀️

These detectors, including paid services such as Sensity and free ones such as Umm-maybe’s AI Art Detector, are designed to spot hard-to-see markers in AI-generated images. They look for unusual patterns in how pixels are arranged, including in their sharpness and contrast, signals that tend to appear when AI programs create images. 👁️🔍
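
To make “sharpness and contrast” concrete, here is a minimal Python sketch of two simple pixel statistics a detector could compute. It assumes a grayscale image stored as a NumPy array of floats between 0 and 1, and the function names are hypothetical. Commercial detectors such as Sensity do not publish their features, so this illustrates the general idea, not any product’s method.

```python
import numpy as np

def laplacian(img: np.ndarray) -> np.ndarray:
    """4-neighbour discrete Laplacian over interior pixels; it responds to fine detail and edges."""
    return (img[:-2, 1:-1] + img[2:, 1:-1] + img[1:-1, :-2] + img[1:-1, 2:]
            - 4.0 * img[1:-1, 1:-1])

def sharpness(img: np.ndarray) -> float:
    """Variance of the Laplacian: a common, simple proxy for how sharp an image is."""
    return float(laplacian(img).var())

def rms_contrast(img: np.ndarray) -> float:
    """RMS contrast: the standard deviation of pixel intensities."""
    return float(img.std())

# A flat grey image versus a one-pixel black-and-white checkerboard.
flat = np.full((8, 8), 0.5)
checker = (np.indices((8, 8)).sum(axis=0) % 2).astype(float)
print(sharpness(flat), rms_contrast(flat))        # 0.0 0.0
print(sharpness(checker), rms_contrast(checker))  # 16.0 0.5
```

A real detector would feed many such measurements, or learned features, into a trained classifier rather than reading off two numbers.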

However, these detectors ignore contextual clues, so they do not register that a lifelike robot standing next to Mr. Musk in a photo is implausible. That is one weakness of relying on this technology to detect fakes. 🤷‍♂️💔

Several companies, including Sensity, Hive, and Inholo, the company behind Illuminarty, did not dispute the results and said their systems were constantly improving to keep up with the latest advances in AI image generation. Hive added that misclassifications may occur when it analyzes lower-quality images. Umm-maybe and Optic, the company behind AI or Not, did not respond to requests for comment. 🏭📞🙅‍♂️

To conduct the tests, The Times gathered AI images from artists and researchers familiar with various generative tools such as Midjourney, Stable Diffusion, and DALL-E, which can create realistic portraits of people and animals as well as lifelike depictions of nature, real estate, food, and more. The real images came from The Times’s photo archive. 🧪🔬🎨

AI image detection technology has been hailed as a means to mitigate the harm caused by AI-generated images. However, AI experts like Chenhao Tan, an assistant professor of computer science at the University of Chicago and the director of its Chicago Human+AI research lab, are skeptical. 🧑‍🎓📚

“In general, I don’t think they’re great, and I’m not optimistic that they will be,” he said. “In the short term, they may perform with some accuracy, but in the long run, AI will be able to recreate anything special that a human does with images, making it very difficult to distinguish the difference.” 🙄💡

Most of the concern has focused on lifelike portraits. Gov. Ron DeSantis of Florida, who is also a Republican candidate for president, was criticized after his campaign used AI-generated images in a post. Synthetic artwork depicting scenery has also caused confusion in political races. 🏞️📢

Many of the companies behind AI detectors acknowledge the imperfections of their tools and warn about a technological arms race. The detectors are constantly playing catch-up to AI systems that are improving at a rapid pace. 🏎️⏰

“Every time somebody builds a better generator, people build better discriminators, and then people use the better discriminator to build a better generator,” said Cynthia Rudin, a computer science and engineering professor at Duke University, where she is also the principal investigator at the Interpretable Machine Learning Lab. “The generators are designed to fool a detector.” 🔄🧱
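
Rudin’s loop can be caricatured in a few lines of Python. This is a deliberately tiny, hypothetical model, not how real image generators are trained (those are neural networks updated by gradient descent, as in generative adversarial networks): each “image” is a single number, the discriminator is a threshold, and the generator has one parameter. It shows the dynamic she describes: the discriminator starts out nearly perfect, and each time the generator uses it to improve, its accuracy slides toward a coin flip.

```python
import numpy as np

rng = np.random.default_rng(0)
real = rng.normal(loc=5.0, scale=1.0, size=2000)  # stand-in for a feature measured on real photos

def fit_discriminator(real, fake):
    """'Build a better discriminator': place a threshold halfway between the two sample means."""
    threshold = (real.mean() + fake.mean()) / 2
    real_is_above = bool(real.mean() > fake.mean())
    return threshold, real_is_above

def accuracy(threshold, real_is_above, real, fake):
    """Share of samples the threshold sorts correctly into real vs. fake."""
    real_correct = ((real > threshold) == real_is_above).sum()
    fake_correct = ((fake > threshold) != real_is_above).sum()
    return (real_correct + fake_correct) / (len(real) + len(fake))

mu = 0.0  # the generator's single parameter: the mean of its output
history = []
for _ in range(15):
    fake = rng.normal(loc=mu, scale=1.0, size=2000)
    threshold, real_is_above = fit_discriminator(real, fake)
    history.append(accuracy(threshold, real_is_above, real, fake))
    # 'Use the better discriminator to build a better generator':
    # shift the output mean to roughly where the discriminator's boundary sits.
    mu += threshold - fake.mean()

print([round(float(a), 2) for a in history])
```

The first accuracy is close to 1 and the last is close to 0.5, meaning the threshold can no longer tell the two apart.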

Sometimes the detectors fail even when an image is obviously fake. Dan Lytle, an artist who works with AI and runs a TikTok account called The_AI_Experiment, asked Midjourney to create an old-fashioned picture of a giant Neanderthal standing among regular men. It produced an aged portrait of a towering, Yeti-like beast next to a quaint couple. 👨‍🎨🦍💔

Every service tested got this image wrong, which highlights a weakness of current AI detectors: they tend to struggle with images that have been altered from their original output or are of low quality, according to Kevin Guo, a founder and the chief executive of Hive, an image-detection tool. When AI generators like Midjourney create photorealistic artwork, he explained, they pack the image with millions of pixels, each carrying clues about its origins. If the image is distorted, resized, or lowered in resolution, those pixels change and the extra digital signal is lost. 🧩🔧

For example, when Hive analyzed a higher-resolution version of the Yeti artwork, it correctly identified it as AI-generated. Such shortfalls can undermine the potential of AI detectors as a weapon against fake content. As images spread online, they are often copied, resaved, shrunk, or cropped, which obscures the signals that AI detectors rely on. Adobe Photoshop’s generative fill, a new tool that uses AI to expand a photo beyond its original borders, confused most detection services when they were tested on a photo enlarged with it. 📷🌐❗
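
Guo’s point about resizing can be shown with a toy in Python and NumPy. Everything here is a hypothetical stand-in: the “fingerprint” is a faint checkerboard rather than any real generator’s artifact, and resizing is modeled as 2x block averaging followed by pixel repetition rather than a real image library’s resampling filter. The faint pattern is easy to recover from the original, but shrinking the image averages each 2x2 block, which is exactly where the pattern cancels out.

```python
import numpy as np

SIZE = 64
# Hypothetical "fingerprint": a faint one-pixel checkerboard standing in for the subtle
# pixel-level clues Guo describes (real generator artifacts are far more complex).
fingerprint = np.where(np.indices((SIZE, SIZE)).sum(axis=0) % 2 == 0, 1.0, -1.0)
scene = np.tile(np.linspace(0.0, 1.0, SIZE), (SIZE, 1))  # smooth left-to-right gradient
image = scene + 0.05 * fingerprint

def fingerprint_strength(img):
    """Correlate the image with the known pattern; about 0.05 means the clue is intact."""
    return float(np.mean(img * fingerprint))

def shrink_then_enlarge(img, factor=2):
    """Reduce resolution by averaging factor x factor blocks, then scale back up by repeating pixels."""
    h, w = img.shape
    small = img.reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))
    return np.repeat(np.repeat(small, factor, axis=0), factor, axis=1)

print(fingerprint_strength(image))                       # roughly 0.05: fingerprint intact
print(fingerprint_strength(shrink_then_enlarge(image)))  # roughly 0: fingerprint averaged away
```

The picture itself barely changes to the eye, yet the signal a detector would look for is gone.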

Another portrait, featuring President Biden, had higher resolution and was captured by Damon Winter, the photographer for The Times, in Gettysburg, PA. While most detectors correctly identified it as genuine, some did not. 🧐🚫

Mislabeling a genuine image as AI-generated is a significant risk associated with AI detectors. Sensity managed to correctly label most AI images as artificial, but it mistakenly labeled many real photographs as AI-generated. These risks could also impact artists, who may be falsely accused of using AI tools in their artwork. 📛✖️📸
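
The Sensity result shows why a detector has to be judged on two numbers, not one. Here is a minimal Python sketch of that bookkeeping, assuming each test image has a known true label and a yes/no verdict from the detector. The names are hypothetical, and this is not the Times’ actual scoring method.

```python
from dataclasses import dataclass

@dataclass
class Scorecard:
    caught_fakes: int   # AI images labeled AI-generated (true positives)
    missed_fakes: int   # AI images labeled real (false negatives)
    false_alarms: int   # real photos labeled AI-generated (false positives)
    cleared_reals: int  # real photos labeled real (true negatives)

    def detection_rate(self) -> float:
        """Share of AI images the detector catches."""
        return self.caught_fakes / (self.caught_fakes + self.missed_fakes)

    def false_positive_rate(self) -> float:
        """Share of genuine photos wrongly flagged as AI-generated."""
        return self.false_alarms / (self.false_alarms + self.cleared_reals)

def score(labels, predictions):
    """Tally a detector's verdicts; True means 'AI-generated' in both lists."""
    card = Scorecard(0, 0, 0, 0)
    for is_ai, flagged in zip(labels, predictions):
        if is_ai and flagged:
            card.caught_fakes += 1
        elif is_ai:
            card.missed_fakes += 1
        elif flagged:
            card.false_alarms += 1
        else:
            card.cleared_reals += 1
    return card

labels = [True] * 4 + [False] * 4  # hypothetical: 4 AI images, 4 real photos
flag_everything = [True] * 8       # a detector that calls everything AI
card = score(labels, flag_everything)
print(card.detection_rate(), card.false_positive_rate())  # 1.0 1.0
```

A detector that flags everything catches every fake, but only the second number reveals that it also accuses every genuine photo.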

Most, but not all, of the AI detectors correctly identified a Jackson Pollock painting called “Convergence,” with the artist’s familiar colorful splatters, as real rather than an AI-generated copy. Illuminarty’s creators said they wanted a detector that could identify fake artwork such as paintings and drawings. In the tests, Illuminarty correctly assessed most real photos as authentic but labeled only about half of the AI images as artificial. Its creators say the tool is deliberately cautious so that it does not falsely accuse artists of using AI. 🎨🔍🧑‍🎨
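
Illuminarty’s trade-off comes down to where a detector sets its decision threshold. In this hypothetical Python sketch, a detector outputs a score between 0 and 1 and flags an image as AI-generated when the score reaches a threshold. The scores are invented for illustration and are not Illuminarty’s.

```python
def rates_at(threshold, ai_scores, real_scores):
    """Flag an image as AI when its score is at or above the threshold; return (catch rate, false-alarm rate)."""
    caught = sum(s >= threshold for s in ai_scores) / len(ai_scores)
    false_alarms = sum(s >= threshold for s in real_scores) / len(real_scores)
    return caught, false_alarms

# Hypothetical detector scores (0 = surely real, 1 = surely AI), invented for illustration only.
ai_scores = [0.95, 0.9, 0.85, 0.8, 0.6, 0.55, 0.4, 0.3]
real_scores = [0.6, 0.45, 0.35, 0.2, 0.15, 0.1, 0.05, 0.02]

for threshold in (0.5, 0.75):
    caught, false_alarms = rates_at(threshold, ai_scores, real_scores)
    print(threshold, caught, false_alarms)
# 0.5 0.75 0.125
# 0.75 0.5 0.0
```

Raising the threshold stops the false accusation against the one high-scoring real photo, at the cost of letting half of the AI images through.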

Illuminarty’s tool, along with most other detectors, correctly identified a similar Pollock-style image that The New York Times created using Midjourney. 💥📝🖼️

AI detection companies claim that their services promote transparency and accountability, helping to detect fake content, fraud, nonconsensual pornography, artistic dishonesty, and other misuses of the technology. However, experts warn that financial markets and voters could become vulnerable to AI trickery. 💸🗳️🤖

An image in the style of a black-and-white portrait, made with Midjourney by Marc Fibbens, an artist in New Zealand who works with AI, looks fairly convincing, yet most AI detectors still correctly identified it as fake. But when a little grain was added, detectors like Hive suddenly judged the fake images to be real photos. The barely visible texture interfered with their ability to analyze the pixels for signs of AI generation. Some companies are now trying to detect AI use by evaluating perspective or the size of subjects’ limbs, in addition to scrutinizing pixels. 🤔📊🔍
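
Why a little grain can flip a verdict is easiest to see with a deliberately naive detector. The Python sketch below is hypothetical: the rule “unusually smooth pixels mean AI” and its threshold are assumptions chosen for illustration, not how Hive or any other service works. Adding faint random noise, a stand-in for film grain, pushes the fine-detail statistic far above the threshold, so the same synthetic image is now called real.

```python
import numpy as np

def sharpness(img):
    """Variance of a 4-neighbour Laplacian: high for fine texture, near zero for smooth gradients."""
    lap = (img[:-2, 1:-1] + img[2:, 1:-1] + img[1:-1, :-2] + img[1:-1, 2:]
           - 4.0 * img[1:-1, 1:-1])
    return float(lap.var())

def toy_detector(img, threshold=1e-4):
    """Hypothetical rule: suspiciously smooth pixels => 'ai-generated', otherwise 'real'."""
    return "ai-generated" if sharpness(img) < threshold else "real"

rng = np.random.default_rng(42)
synthetic = np.tile(np.linspace(0.2, 0.8, 128), (128, 1))  # an unnaturally smooth "AI" image
grain = rng.normal(0.0, 0.02, synthetic.shape)              # faint, barely visible noise
grainy = np.clip(synthetic + grain, 0.0, 1.0)

print(toy_detector(synthetic))  # ai-generated
print(toy_detector(grainy))     # real
```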

Artificial intelligence can generate more than realistic images: the technology is already creating text, audio, and videos that have fooled professors, scammed people out of money, and been used in attempts to change the outcome of wars. 📚💰📺🤯

According to researchers, AI detection tools should not be the only defense against fake content. S. Shyam Sundar, the director of the Center for Socially Responsible Artificial Intelligence at Pennsylvania State University, said image creators should embed watermarks in their work. Websites could also build detection tools into their systems so they can automatically identify AI images and show them to users cautiously, with warnings and restrictions on how they can be shared. 🚫💦🌐
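
Watermarks can take many forms. The simplest textbook version, sketched below in Python with NumPy, hides a short tag in the least significant bit of each pixel and changes no value by more than 1. This is an illustrative assumption, not a scheme Sundar or any AI company is known to use, and the function names are hypothetical. A least-significant-bit mark like this one would not survive the resizing and recompression that images go through as they spread online; practical watermarks are designed to be far more robust.

```python
import numpy as np

def embed_watermark(pixels: np.ndarray, bits: list[int]) -> np.ndarray:
    """Write each bit into the least significant bit of successive pixels of a uint8 image."""
    marked = pixels.flatten()  # flatten() returns a copy, so the input is left untouched
    payload = np.array(bits, dtype=np.uint8)
    marked[: payload.size] = (marked[: payload.size] & 0xFE) | payload
    return marked.reshape(pixels.shape)

def read_watermark(pixels: np.ndarray, n_bits: int) -> list[int]:
    """Recover the first n_bits least significant bits."""
    return [int(b) for b in pixels.ravel()[:n_bits] & 1]

rng = np.random.default_rng(7)
image = rng.integers(0, 256, size=(16, 16), dtype=np.uint8)
mark = [1, 0, 1, 1, 0, 0, 1, 0]  # hypothetical 8-bit "made with AI" tag
tagged = embed_watermark(image, mark)
print(read_watermark(tagged, len(mark)))  # [1, 0, 1, 1, 0, 0, 1, 0]
```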

Images are especially powerful, Mr. Sundar said, because they “have the ability to evoke a visceral response. People are much more likely to believe what they see with their own eyes.” 🤩👁️👍